Summary

This article explains how to use the robots.txt file effectively to strengthen your SEO strategy. It offers essential insights to help you keep pace with modern web dynamics while safeguarding your site's visibility and security.

Key Points:

- Leverage robots.txt for dynamic content management by integrating it with JavaScript frameworks and server-side rendering to tailor access based on user behavior.

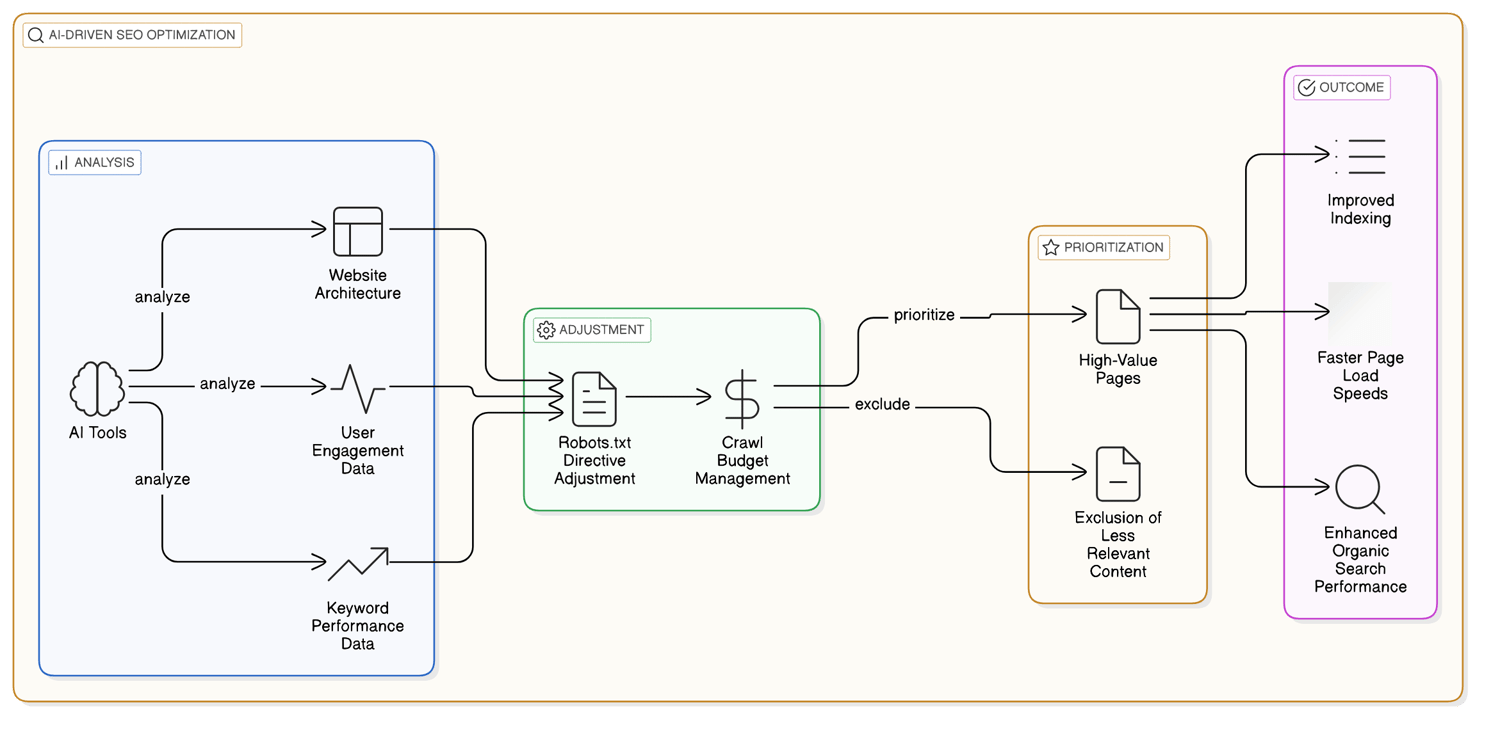

- Adapt to AI-powered crawlers by understanding their capabilities and how they interpret robots.txt, allowing for better control of your website's indexing.

- Optimize discoverability through the strategic use of Schema Markup alongside robots.txt, ensuring search engines can access rich data without hindrances.

What is a robots.txt File and Why Should You Care?

My First Robots.txt Attempt: A Cautionary Tale

But then came the shocker: two days later, traffic dropped by 40%. “No way!” I yelled at my laptop. What did I mess up? My heart raced as I opened Google Search Console, only to find that I'd accidentally blocked my entire site, product pages included, with a single "Disallow: /" rule.

“Seriously?! This can’t be happening,” I groaned. Every emotion hit me like a freight train—excitement turned to despair in just 48 hours. Friends told me it was “just SEO,” but in that moment, it felt like everything was on the line. How could something so simple go so wrong?

| Best Practice | Description | Benefits | Common Mistakes | Latest Trends |
|---|---|---|---|---|
| Block Unnecessary URLs | Use robots.txt to disallow crawling of low-value pages like admin sections or duplicate content. | Improves crawl efficiency and focuses search engine attention on valuable pages. | Blocking important pages by mistake, leading to lost traffic. | Implement AI-driven tools for better URL analysis. |
| Avoid Blocking Important Content | Ensure that key content is not inadvertently blocked in your robots.txt file. | Maintains visibility of essential pages in search results, enhancing SEO rankings. | Using blanket disallow rules which can affect entire sections of the site. | Regular audits with SEO tools to identify critical paths. |
| Manage Faceted Navigation | Handle faceted navigation correctly to prevent duplicate content issues by specifying what should be crawled and indexed. | Reduces redundancy in indexing, improving overall site authority and relevance. | Ignoring parameters that create duplicates, causing dilution of page rank. | Utilizing canonical tags alongside robots.txt for clarity. |
| Regular Reviews and Updates | Continuously review and update your robots.txt as your website grows or changes structure. | Ensures that new valuable content remains indexable while unnecessary URLs are blocked effectively. | Neglecting updates which leads to outdated blocking rules still being applied. | Adopting a proactive approach with scheduled reviews every quarter. |
| Testing with Google Search Console | Use testing tools like Google Search Console to verify the functionality of your robots.txt file after changes. | Confirms that intended blocks are working correctly without hindering important crawls. | Failing to test after modifications can lead to significant SEO setbacks. | Incorporating visual site maps for easier analysis during tests. |
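The "blanket disallow" mistake from the table is easy to catch automatically. Here is a minimal sketch in Python that scans a robots.txt body and reports which user agents have a bare `Disallow: /`. The function name `find_blanket_disallows` and the sample file are made up for illustration, and the parsing is deliberately simplified rather than a full implementation of the standard.

```python
def find_blanket_disallows(robots_txt: str) -> list[str]:
    """Return the user agents whose group contains a bare 'Disallow: /'."""
    flagged: list[str] = []
    current_agents: list[str] = []
    in_rules = False  # True once the current group has seen a rule line

    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()

        if field == "user-agent":
            if in_rules:
                # A User-agent line after rule lines starts a new group.
                current_agents = []
                in_rules = False
            current_agents.append(value)
        elif field in ("allow", "disallow"):
            in_rules = True
            if field == "disallow" and value == "/":
                flagged.extend(a for a in current_agents if a not in flagged)
    return flagged


# Hypothetical file: everything is blocked for all bots.
sample = """\
User-agent: *
Disallow: /

User-agent: Googlebot
Disallow: /admin/
"""

print(find_blanket_disallows(sample))  # ['*']
```

A check like this could run before every deploy, so a site-wide block never ships unnoticed.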

The Turning Point: Understanding the Real Power of robots.txt

“Maybe we overlooked something,” she suggested hesitantly, glancing at me as if expecting an answer that didn’t exist. We all felt it—an underlying tension that hung in the air like a thick fog. Some began flipping through old notes while others stared blankly at charts displaying a stark 40% drop in traffic—I could practically hear their thoughts: How did we let this happen?

Time ticked on slowly, each second stretching into eternity as we collectively grappled with our mistake. Then silence enveloped us; no one knew what to say next or how to fix this unraveling mess. It felt like we were stuck in limbo, waiting for someone to break the ice—but nobody did.

How We Helped a Website Recover from robots.txt Errors

Frustration was palpable; Sarah waved her hands dismissively at our initial suggestions. “We’ve tried tweaking before with no success!” she exclaimed, glancing at the chart showing a staggering 40% drop in traffic over just three days.

Despite the skepticism, we pressed on and began drafting new rules for critical pages. Yet as Thursday approached, doubt crept back in—“Can these changes really turn things around?” someone muttered under their breath. The air was thick with uncertainty; nobody could confidently predict whether our efforts would bear fruit this time around.

Robots.txt FAQs: Common Mistakes and Solutions

For instance, I've seen cases where site owners unintentionally block essential resources like stylesheets or JavaScript files. This mistake can seriously hinder how search engines render and index their pages. Imagine trying to get your content noticed, but it's all jumbled up because those crucial resources are off-limits! 😱
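To check for this kind of mistake, you could run your stylesheet and script URLs through Python's standard-library `urllib.robotparser`. The sketch below assumes a hypothetical rule set that blocks `/assets/` and uses made-up example.com URLs. Keep in mind that this parser does simple prefix matching and does not understand wildcards, so treat it as a rough first pass.

```python
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given rules forbid the agent to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


# Hypothetical rules that accidentally hide CSS and JavaScript.
rules = """\
User-agent: *
Disallow: /assets/
"""

resources = [
    "https://www.example.com/assets/site.css",
    "https://www.example.com/assets/app.js",
    "https://www.example.com/blog/post.html",
]

for url in blocked_resources(rules, resources):
    print("blocked:", url)
```

Here the two files under `/assets/` are reported as blocked, while the blog post is not.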

Another frequent pitfall is incorrect syntax in the robots.txt file. Using wildcards improperly might prevent access to content you actually want indexed—yikes! 💥 So what’s the solution? First off, double-check your directives to make sure they’re accurate. And hey, utilizing tools like Google Search Console can be a game changer; it helps you see how search engines interpret your rules.
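To see how a wildcard can catch more than you intended, here is a small sketch that translates a robots.txt path pattern into a regular expression. It assumes the common convention where `*` matches any sequence of characters and a trailing `$` anchors the end of the URL. The helper names are made up for illustration, and real crawlers may differ in edge cases.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a path pattern using * and a trailing $ into a regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal pieces and join them with ".*" for each wildcard.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def matches(pattern: str, path: str) -> bool:
    """True if the pattern matches the path from its first character."""
    return pattern_to_regex(pattern).match(path) is not None


print(matches("/*.pdf$", "/files/report.pdf"))       # True
print(matches("/*.pdf$", "/files/report.pdf?x=1"))   # False: $ requires the URL to end in .pdf
print(matches("/*print", "/print/invoice"))          # True: the intended target
print(matches("/*print", "/blog/blueprint-guide"))   # True: an accidental match
```

The last line is the pitfall in action: a rule meant for print views also blocks a blog post that happens to contain "print" in its URL.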

Regularly auditing your robots.txt file is also vital for keeping everything on track. By staying proactive about these details, you’ll ensure that valuable content isn’t accidentally restricted from being indexed—leading to a more successful SEO strategy overall! 💪
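One lightweight way to audit is to keep a known-good copy of the file and compare it with what is currently live. The sketch below uses Python's `difflib` for that. The two file bodies are made up, and how you fetch the live file is left out on purpose.

```python
import difflib


def robots_diff(baseline: str, current: str) -> list[str]:
    """Return the lines added (+) or removed (-) between two versions."""
    diff = list(
        difflib.unified_diff(
            baseline.splitlines(),
            current.splitlines(),
            fromfile="baseline",
            tofile="current",
            lineterm="",
        )
    )
    # Skip the two header lines, then keep only real additions and removals.
    return [line for line in diff[2:] if line.startswith(("+", "-"))]


# Hypothetical versions: someone replaced a narrow rule with a site-wide block.
baseline = "User-agent: *\nDisallow: /admin/\n"
current = "User-agent: *\nDisallow: /\n"

for change in robots_diff(baseline, current):
    print(change)
```

An empty result means nothing changed since your last review. Anything else deserves a look before it costs you traffic.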

Beyond the Basics: Advanced Robots.txt Strategies for SEO?

The robots.txt Dilemma: Balancing SEO and User Experience

Practical Implementation: Creating and Testing Your robots.txt File

In the world of SEO, understanding how to effectively use your robots.txt file can be a game-changer. This small but mighty file acts as a guide for search engine crawlers, telling them which parts of your site they may crawl and which ones to skip. Keep in mind that it controls crawling rather than indexing: a blocked URL can still show up in search results if other pages link to it. Implementing it correctly can help enhance your site's performance and visibility in search results.

I remember when I first started optimizing websites; I often overlooked this essential component. Many people don’t realize that even minor misconfigurations in the robots.txt file can lead to significant issues, such as blocking important content from being indexed. So let’s dive into creating and testing your own robots.txt file with some practical steps!

#### Step-by-Step Guide:

1. **Understand Your Needs**:

- Before you start writing your robots.txt file, take a moment to clarify what you want search engines to do with your site. Are there specific pages or sections you'd like them to skip? (It helps to list these out!)

2. **Create Your Robots.txt File**:

- Open a text editor (like Notepad or TextEdit) and start drafting.

- Use the following basic syntax:

    User-agent: *
    Disallow: /private-directory/
    Allow: /public-directory/

- This example tells all bots not to crawl anything in `private-directory` but allows access to `public-directory`.

3. **Save Your File**:

- Save the document as “robots.txt”, making sure your editor does not append an extra extension (for example, robots.txt.txt). It should be plain text!

4. **Upload the File**:

- Place this newly created file in the root directory of your website, using an FTP client or your hosting provider's control panel, so that it is reachable at a URL like www.example.com/robots.txt.

5. **Test Your Robots.txt File**:

- Head over to Google Search Console. Its robots.txt report shows which robots.txt files Google has fetched and flags any lines it could not parse, and the URL Inspection tool tells you whether a specific URL is blocked by your rules. (Older guides mention a standalone robots.txt Tester, which Google has since retired.)

- This is one of the most crucial steps! Make sure everything looks right, because mistakes like typos or incorrect paths slip through easily.

6. **Monitor Changes Regularly**:

- After making changes, keep an eye on how they affect indexing through Google Search Console reports.

- Consider setting reminders every few months to review any updates needed as your website evolves.
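Before uploading, you can also rehearse the create, save, and test steps above locally. This is a minimal sketch using Python's standard library: it writes the example file to a temporary folder and checks a few made-up example.com URLs with `urllib.robotparser`. That parser applies the first matching rule and ignores wildcards, so its verdicts may not match every search engine's.

```python
from pathlib import Path
from tempfile import TemporaryDirectory
from urllib.robotparser import RobotFileParser

# The example rules from step 2.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private-directory/
Allow: /public-directory/
"""


def check_urls(robots_path: Path, urls: list[str], agent: str = "*") -> dict[str, bool]:
    """Map each URL to True (crawlable) or False (blocked) for the agent."""
    parser = RobotFileParser()
    parser.parse(robots_path.read_text(encoding="utf-8").splitlines())
    return {url: parser.can_fetch(agent, url) for url in urls}


with TemporaryDirectory() as tmp:
    # Step 3: save as plain text under the exact name robots.txt.
    robots_path = Path(tmp) / "robots.txt"
    robots_path.write_text(ROBOTS_TXT, encoding="utf-8")

    # Step 5: test a few representative URLs (hypothetical paths).
    results = check_urls(
        robots_path,
        [
            "https://www.example.com/private-directory/page.html",
            "https://www.example.com/public-directory/index.html",
            "https://www.example.com/about.html",
        ],
    )

for url, allowed in results.items():
    print("allowed" if allowed else "blocked", url)
```

Only the URL under `private-directory` comes back as blocked. A local check like this complements the Search Console check, it does not replace it.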

#### Advanced Tips:

If you're looking for more advanced applications of your robots.txt file, consider temporarily disallowing sections that are under maintenance while keeping critical pages available for crawling. That way crawlers skip half-finished pages while search engines still reach the content that matters. Just remember to remove the temporary rules once the work is done.

Also, if you’re running into frequent issues with unwanted crawls on specific parameters or query strings, consider adding directives specifically tailored for those scenarios.
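As a rough illustration, the sketch below generates Disallow rules for a list of query parameters. The parameter names (`sort`, `sessionid`) and the helper `parameter_rules` are made up, and the patterns assume crawlers that support the `*` wildcard, so check the output against your own URLs before using it.

```python
def parameter_rules(params: list[str]) -> list[str]:
    """Build Disallow lines that target URLs carrying the given parameters."""
    rules = []
    for name in params:
        rules.append(f"Disallow: /*?{name}=")  # parameter is first in the query string
        rules.append(f"Disallow: /*&{name}=")  # parameter follows another parameter
    return rules


# Hypothetical parameters that only create duplicate views of the same page.
print("User-agent: *")
print("\n".join(parameter_rules(["sort", "sessionid"])))
```

Writing one rule for `?name=` and one for `&name=` avoids catching unrelated parameters that merely end with the same letters, such as `resort=`.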

And remember: If you ever find yourself unsure about whether something should be disallowed or allowed, err on the side of caution—you can always adjust later based on data gathered from analytics tools! Happy optimizing!

Future-Proofing Your robots.txt: What's Next?

Conclusion: Mastering robots.txt for SEO Success

Think of it this way: every adjustment you make in your robots.txt can either enhance or hinder your online performance. Regular audits are essential—not only to align with changes in your website but also to stay ahead of SEO trends that could affect visibility. Are you currently keeping pace with these changes? If not, now is the perfect time to reassess and refine your approach.

Moreover, utilizing testing tools not only saves you from potential pitfalls but empowers you to optimize resources effectively without compromising critical pages. So take action today! Review your current setup, implement these best practices, and witness firsthand how a well-optimized robots.txt file can propel your SEO success forward. The landscape continues to shift—how will you ensure you're on the cutting edge?
